
Quiver is a distributed graph learning library for PyTorch Geometric (PyG). The goal of Quiver is to make large-scale distributed graph learning fast and easy to use.

Why Quiver?

The primary motivation for this project is to make it easy to take a PyG script and scale it across many GPUs and CPUs. In a typical workflow, users design graph learning algorithms with PyG's high-level APIs and rich examples, and then use Quiver to run those PyG algorithms at large scale. To make scaling efficient, Quiver has the following features:

-

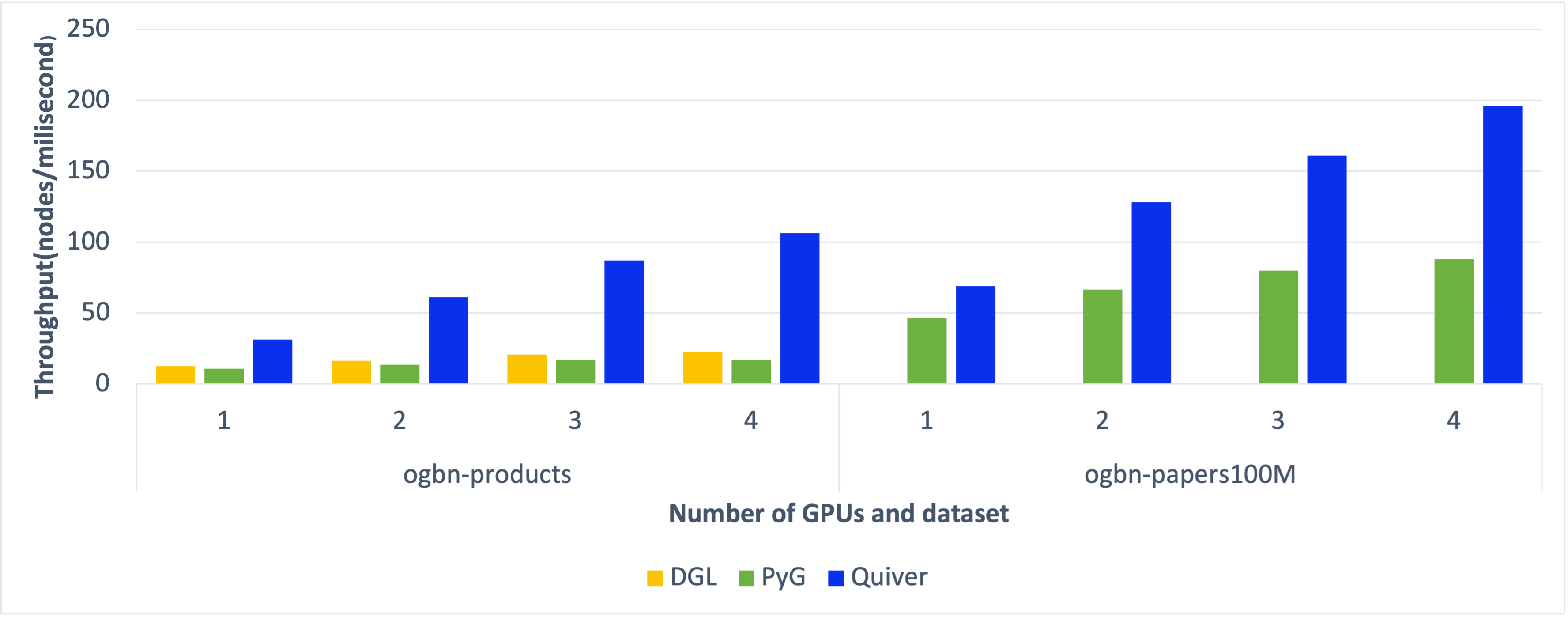

High performance: Quiver enables GPUs to be efficiently used in accelerating performance-critical graph learning tasks: graph sampling, feature collection and data-parallel training. Quiver thus can out-perform PyG and DGL even with a single GPU, especially when processing large-scale datasets and models.

-

High scalability: Quiver can achieve (super) linear scalability in distributed graph learning. This is contributed by Quiver's novel communication-efficient data/processor management techniques and effective usage of fast networking technologies (e.g., NVLink and RDMA).

- Easy to use: Quiver requires only a few new lines of code in existing PyG programs and it has no external heavy dependency. Quiver is thus easy to be adopted by PyG users and integrated into production clusters.

A benchmark chart compares the performance of Quiver, PyG (2.0.1) and DGL (0.7.0) on a 4-GPU server running the Open Graph Benchmark (OGB).

Multi-node results will be added soon.

For system design details, see Quiver's design overview (Chinese version: 设计简介).

Install

Pip Install

To install Quiver:

1. Install PyTorch.

2. Install PyG.

3. Install the Quiver pip package:

```shell
$ pip install torch-quiver
```

We have tested Quiver with the following setup:

- OS: Ubuntu 18.04, Ubuntu 20.04

- CUDA: 10.2, 11.1

- GPU: P100, V100, Titan X, A6000
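To compare a machine against the tested setup above, a small environment report can help. The sketch below is a hypothetical helper (`report_environment` and `cuda_major_minor` are not part of Quiver); it only reads standard PyTorch attributes (`torch.__version__`, `torch.version.cuda`, `torch.cuda.get_device_name`) and checks whether the `quiver` module can be found, without failing when a package is missing.

```python
import importlib.util
import platform
import re

# CUDA versions from the tested setup listed above.
TESTED_CUDA_VERSIONS = {"10.2", "11.1"}


def cuda_major_minor(version):
    """Reduce a CUDA version string such as "11.1.105" to "11.1" (None stays None)."""
    if version is None:
        return None
    match = re.match(r"(\d+)\.(\d+)", version)
    return f"{match.group(1)}.{match.group(2)}" if match else None


def report_environment():
    """Collect OS, PyTorch, CUDA, GPU and Quiver information; missing packages are reported as None/False."""
    info = {"os": platform.platform(), "torch": None, "cuda": None,
            "tested_cuda": False, "gpu": None, "quiver_installed": False}
    if importlib.util.find_spec("torch") is not None:
        import torch
        info["torch"] = torch.__version__
        info["cuda"] = cuda_major_minor(torch.version.cuda)  # None for CPU-only builds
        info["tested_cuda"] = info["cuda"] in TESTED_CUDA_VERSIONS
        if torch.cuda.is_available():
            info["gpu"] = torch.cuda.get_device_name(0)
    # The pip package is named torch-quiver, but the module is imported as quiver.
    info["quiver_installed"] = importlib.util.find_spec("quiver") is not None
    return info


if __name__ == "__main__":
    for key, value in report_environment().items():
        print(f"{key}: {value}")
```

An untested CUDA version does not necessarily mean Quiver will fail; the report only shows how far a machine is from the configurations listed above.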

Test Install

To test the installation, clone the repository and run one of Quiver's examples:

```shell
$ git clone git@github.com:quiver-team/torch-quiver.git && cd torch-quiver
$ python3 examples/pyg/reddit_quiver.py
```

A successful run prints lines of the following form:

```
Epoch xx, Loss: xx.yy, Approx. Train: xx.yy
```
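For automated checks (for example in CI), the expected progress line can be matched with a regular expression. This is a minimal sketch under the assumption that the example prints lines exactly in the form shown above; `parse_epoch_lines` and `smoke_test` are hypothetical helpers, and `smoke_test` must be run from the repository root.

```python
import re
import subprocess
import sys

# Matches progress lines such as "Epoch 01, Loss: 1.2345, Approx. Train: 0.8765".
EPOCH_LINE = re.compile(
    r"Epoch\s+(?P<epoch>\d+),\s*Loss:\s*(?P<loss>\d+(?:\.\d+)?),"
    r"\s*Approx\. Train:\s*(?P<acc>\d+(?:\.\d+)?)"
)


def parse_epoch_lines(output):
    """Return (epoch, loss, approx_train_acc) tuples for every progress line in output."""
    results = []
    for line in output.splitlines():
        match = EPOCH_LINE.search(line)
        if match:
            results.append((int(match["epoch"]), float(match["loss"]), float(match["acc"])))
    return results


def smoke_test(script="examples/pyg/reddit_quiver.py"):
    """Run the example script and fail if it prints no progress line."""
    completed = subprocess.run([sys.executable, script], capture_output=True, text=True, check=True)
    epochs = parse_epoch_lines(completed.stdout)
    if not epochs:
        raise RuntimeError("no 'Epoch xx, Loss: ..., Approx. Train: ...' line in output")
    return epochs


if __name__ == "__main__":
    for epoch, loss, acc in smoke_test():
        print(f"epoch {epoch}: loss={loss:.4f}, approx. train acc={acc:.4f}")
```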

Install from source

To build Quiver from source:

```shell
$ git clone git@github.com:quiver-team/torch-quiver.git && cd torch-quiver
$ sh ./install.sh
```

Use Quiver with Docker

Docker is the simplest way to use Quiver. Check the guide for details.

Quick Start

To use Quiver, you need to replace PyG's graph sampler and feature collector with quiver.Sampler and quiver.Feature. The replacement usually requires only a few changes in existing PyG programs.

Use Quiver in Single-GPU PyG Scripts

Only three steps are required to enable Quiver in a single-GPU PyG script:

```python
import torch
import quiver

...

## Step 1: Replace PyG graph sampler
# train_loader = NeighborSampler(data.edge_index, ...)  # Comment out PyG sampler
# Quiver: PyTorch Dataloader over seed nodes (batch size is illustrative)
train_loader = torch.utils.data.DataLoader(train_idx, batch_size=1024, shuffle=True)
quiver_sampler = quiver.pyg.GraphSageSampler(quiver.CSRTopo(data.edge_index), sizes=[25, 10])  # Quiver: Graph sampler

...

## Step 2: Replace PyG feature collectors
# feature = data.x.to(device)  # Comment out PyG feature collector
quiver_feature = quiver.Feature(rank=0, device_list=[0]).from_cpu_tensor(data.x)  # Quiver: Feature collector

...

## Step 3: Train PyG models with Quiver
# for batch_size, n_id, adjs in train_loader:  # Comment out PyG training loop
for seeds in train_loader:
    n_id, batch_size, adjs = quiver_sampler.sample(seeds)  # Use Quiver graph sampler
    batch_feature = quiver_feature[n_id]  # Use Quiver feature collector
    ...

...
```
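To make `quiver.CSRTopo(data.edge_index)` and `sizes=[25, 10]` more concrete, here is a pure-Python sketch of the kind of work a GraphSAGE-style sampler performs: convert the edge list to CSR (compressed sparse row) form, then at each hop keep at most `sizes[k]` random neighbors of every node gathered so far. This is a conceptual illustration, not Quiver's GPU implementation; the helpers `to_csr` and `sample_neighbors` are hypothetical, and listing neighbors by `edge_index[0]` is a simplifying assumption.

```python
import random


def to_csr(edge_index, num_nodes):
    """Convert a 2 x E edge list into CSR arrays (indptr, indices).

    The neighbors of node v are indices[indptr[v]:indptr[v + 1]], keyed by edge_index[0].
    """
    src, dst = edge_index
    indptr = [0] * (num_nodes + 1)
    for s in src:
        indptr[s + 1] += 1          # count out-edges per node
    for v in range(num_nodes):
        indptr[v + 1] += indptr[v]  # prefix sum turns counts into offsets
    indices = [0] * len(src)
    cursor = indptr[:-1]            # next free slot for each node (slicing copies)
    for s, d in zip(src, dst):
        indices[cursor[s]] = d
        cursor[s] += 1
    return indptr, indices


def sample_neighbors(indptr, indices, seeds, sizes, rng=random):
    """At hop k, keep at most sizes[k] random neighbors (without replacement)
    of every node gathered so far.

    Returns (n_id, layers): n_id lists all touched nodes with the seeds first, and
    layers[k] holds the (node, sampled_neighbor) pairs drawn at hop k.
    """
    n_id = list(dict.fromkeys(seeds))   # de-duplicate seeds, keep their order
    seen = set(n_id)
    layers = []
    for fanout in sizes:
        targets = list(n_id)            # snapshot: nodes added at this hop are expanded next hop
        pairs = []
        for v in targets:
            neighbors = indices[indptr[v]:indptr[v + 1]]
            picked = neighbors if len(neighbors) <= fanout else rng.sample(neighbors, fanout)
            for u in picked:
                pairs.append((v, u))
                if u not in seen:
                    seen.add(u)
                    n_id.append(u)
        layers.append(pairs)
    return n_id, layers


if __name__ == "__main__":
    indptr, indices = to_csr(([0, 0, 1, 2, 2, 3], [1, 2, 2, 0, 3, 0]), num_nodes=4)
    n_id, layers = sample_neighbors(indptr, indices, seeds=[0], sizes=[25, 10])
    print(n_id, layers)
```

Because `n_id` only grows from hop to hop, the features for a whole mini-batch can be gathered in one lookup on the final `n_id`, which mirrors the single `quiver_feature[n_id]` call in the loop above.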

Use Quiver in Multi-GPU PyG Scripts

To use Quiver in multi-GPU PyG scripts, we can simply pass quiver.Feature and quiver.Sampler as arguments to the child processes launched in PyTorch's DDP training, as shown below:

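```python
import torch
import torch.multiprocessing as mp
import quiver

# PyG DDP function that trains GNN models; mp.spawn passes the process rank first
def ddp_train(rank, feature, sampler):
    ...

if __name__ == "__main__":
    world_size = torch.cuda.device_count()  # one training process per visible GPU

    # Replace PyG graph sampler and feature collector with Quiver's alternatives
    quiver_sampler = quiver.pyg.GraphSageSampler(...)
    quiver_feature = quiver.Feature(...)

    mp.spawn(
        ddp_train,
        args=(quiver_feature, quiver_sampler),  # Pass Quiver components as arguments
        nprocs=world_size,
        join=True,
    )
```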

A full multi-GPU Quiver example is available in the Quiver repository.
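Inside `ddp_train`, each process usually trains on its own share of the seed nodes. The hypothetical helper below sketches one contiguous split of `train_idx` by rank; it is an assumption about how a script might partition work, not a Quiver API. In a PyTorch script the same split can be written as `train_idx.tensor_split(world_size)[rank]`.

```python
def shard_seeds(train_idx, world_size, rank):
    """Return the contiguous slice of seed nodes owned by one DDP rank.

    When len(train_idx) is not divisible by world_size, the first
    len(train_idx) % world_size ranks receive one extra seed, matching the
    way torch.tensor_split and numpy.array_split divide uneven lengths.
    """
    if not 0 <= rank < world_size:
        raise ValueError(f"rank {rank} is outside [0, {world_size})")
    base, extra = divmod(len(train_idx), world_size)
    start = rank * base + min(rank, extra)
    stop = start + base + (1 if rank < extra else 0)
    return train_idx[start:stop]


if __name__ == "__main__":
    seeds = list(range(10))
    for rank in range(3):
        print(rank, shard_seeds(seeds, world_size=3, rank=rank))
```

Contiguous shards keep every seed assigned to exactly one rank; shuffling within each shard (for example with a `DataLoader(..., shuffle=True)` per process) still randomizes the batch order.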

Run Quiver

The following command runs Quiver's example script examples/pyg/reddit_quiver.py:

```shell
$ python3 examples/pyg/reddit_quiver.py
```

The commands to run Quiver on single-GPU servers and multi-GPU servers are the same. We will provide multi-node examples soon.

Examples

We provide several examples that show how to enable Quiver in real-world PyG scripts:

- Enabling Quiver in PyG's single-GPU examples: ogbn-products and reddit.

- Enabling Quiver in PyG's multi-GPU examples: ogbn-products and reddit.

Documentation

Quiver provides many parameters to optimise the performance of its graph samplers (e.g., GPU-local or CPU-GPU hybrid sampling) and feature collectors (e.g., feature replication/sharding strategies). Check the documentation for details.
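The replication/sharding trade-off for feature caching can be illustrated with a toy placement rule. This sketch is an assumption-laden model, not Quiver's cache policy: it ranks nodes by degree as a proxy for access frequency, caches the hottest feature rows on GPUs, and leaves the rest in CPU memory. The helper `place_features` and its strategy names are hypothetical.

```python
def place_features(degrees, rows_per_gpu, num_gpus, strategy):
    """Assign each node's feature row to a storage location.

    - "replicate": every GPU caches the same rows_per_gpu hottest rows.
    - "shard": the rows_per_gpu * num_gpus hottest rows are split across GPUs.
    Rows that are not cached stay on the CPU. Returns a dict node -> location.
    """
    # Hottest first; sorting is stable, so ties keep their original node order.
    order = sorted(range(len(degrees)), key=lambda v: degrees[v], reverse=True)
    placement = {}
    for position, node in enumerate(order):
        if strategy == "replicate":
            placement[node] = "all_gpus" if position < rows_per_gpu else "cpu"
        elif strategy == "shard":
            if position < rows_per_gpu * num_gpus:
                placement[node] = f"gpu{position // rows_per_gpu}"
            else:
                placement[node] = "cpu"
        else:
            raise ValueError(f"unknown strategy: {strategy!r}")
    return placement


if __name__ == "__main__":
    degrees = [5, 1, 9, 3, 7]
    print(place_features(degrees, rows_per_gpu=1, num_gpus=2, strategy="replicate"))
    print(place_features(degrees, rows_per_gpu=1, num_gpus=2, strategy="shard"))
```

Replication keeps every cached row local to each GPU, while sharding caches `num_gpus` times as many distinct rows at the cost of reading some of them from a peer GPU (for example over NVLink).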

Community

We welcome new contributors to join the development of Quiver. Quiver is currently maintained by researchers from the University of Edinburgh, Imperial College London, Tsinghua University and the University of Waterloo. The development of Quiver has received support from Alibaba and Lambda Labs.